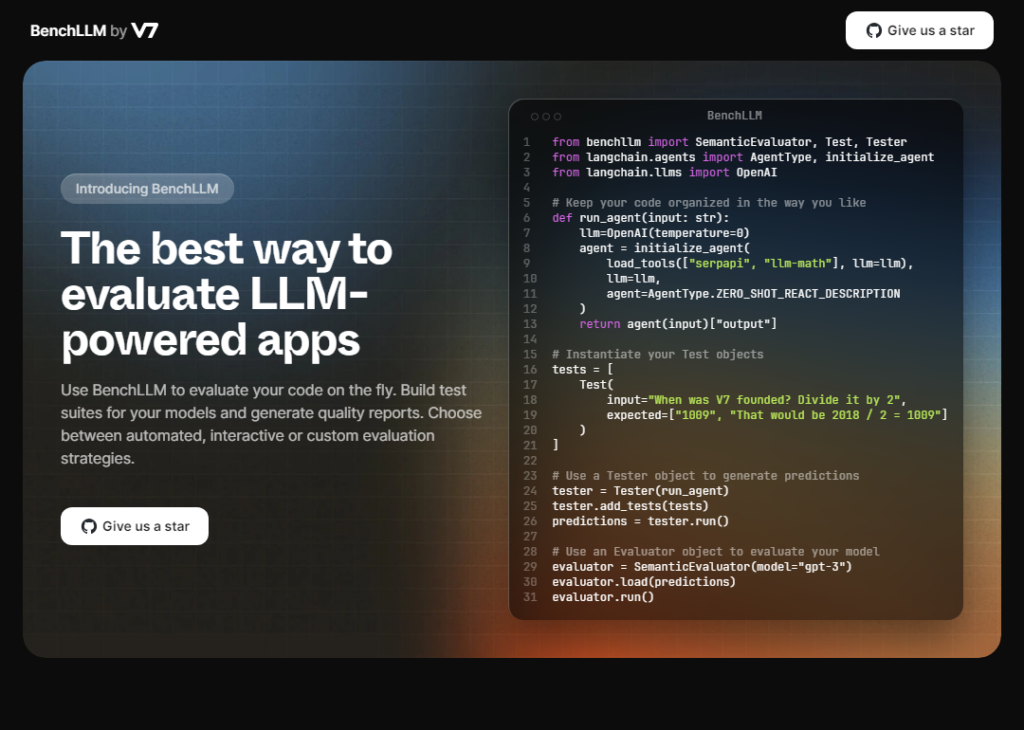

Generated by Gemini: BenchLLM is an open-source Python library that streamlines the testing of Large Language Models (LLMs) and AI-powered applications. It measures the accuracy of your models, agents, or chains by validating their responses on any number of tests, using LLMs as the judge.

BenchLLM implements a distinct two-step methodology for validating your machine learning models:

- Testing: This stage runs your code on any number of test cases, each with its expected responses, and captures the predictions your model produces without judging or comparing them yet.

- Evaluation: The recorded predictions are compared against the expected output, either by an LLM that checks factual similarity or, optionally, by manual review. This stage produces detailed comparison reports that include pass/fail status and other metrics.
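The separation between the two stages can be sketched in plain Python. This is a minimal illustration of the idea, not BenchLLM's actual API: `Case`, `Prediction`, `collect_predictions`, `evaluate`, `exact_match`, and `toy_model` are all hypothetical names invented for this example, and a simple string comparison stands in for the LLM-based similarity check.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    """One test case: an input prompt and the responses we would accept."""
    prompt: str
    expected: List[str]


@dataclass
class Prediction:
    """A recorded model output, kept alongside the case that produced it."""
    case: Case
    output: str


def collect_predictions(model: Callable[[str], str], cases: List[Case]) -> List[Prediction]:
    # Stage 1 (testing): run the model and record outputs, with no judgment yet.
    return [Prediction(case=c, output=model(c.prompt)) for c in cases]


def evaluate(predictions: List[Prediction], is_match: Callable[[str, str], bool]) -> List[bool]:
    # Stage 2 (evaluation): a prediction passes if it matches any expected response.
    return [any(is_match(p.output, e) for e in p.case.expected) for p in predictions]


def exact_match(output: str, expected: str) -> bool:
    # Stand-in judge (assumption): case-insensitive string equality.
    # An LLM-based judge would plug in here with the same signature.
    return output.strip().lower() == expected.strip().lower()


def toy_model(prompt: str) -> str:
    # Hypothetical model under test: knows one answer only.
    answers = {"What is 1 + 1?": "2"}
    return answers.get(prompt, "I don't know")


cases = [
    Case(prompt="What is 1 + 1?", expected=["2", "It's 2"]),
    Case(prompt="What is the capital of France?", expected=["Paris"]),
]

predictions = collect_predictions(toy_model, cases)
results = evaluate(predictions, exact_match)
pass_rate = sum(results) / len(results)
print(results, pass_rate)
```

Because the predictions are stored before any comparison happens, the same recorded outputs could be re-evaluated later with a different judge (for example, a semantic check or a manual review) without calling the model again.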

BenchLLM is useful for anyone developing or using LLMs and AI-powered applications, because it gives you a repeatable way to check that your models produce accurate and reliable results.

Here are some of the benefits of using BenchLLM:

- Improved accuracy: BenchLLM helps you identify errors in your LLM's responses so that you can fix them before they reach production.

- Reduced development time: BenchLLM automates the testing process, so you spend less time checking responses by hand.

- Increased confidence: Passing test suites give you evidence that your LLM's results can be trusted, which supports better decision-making.

If you are developing or using LLMs and AI-powered applications, I highly recommend checking out BenchLLM as a way to test your models systematically.