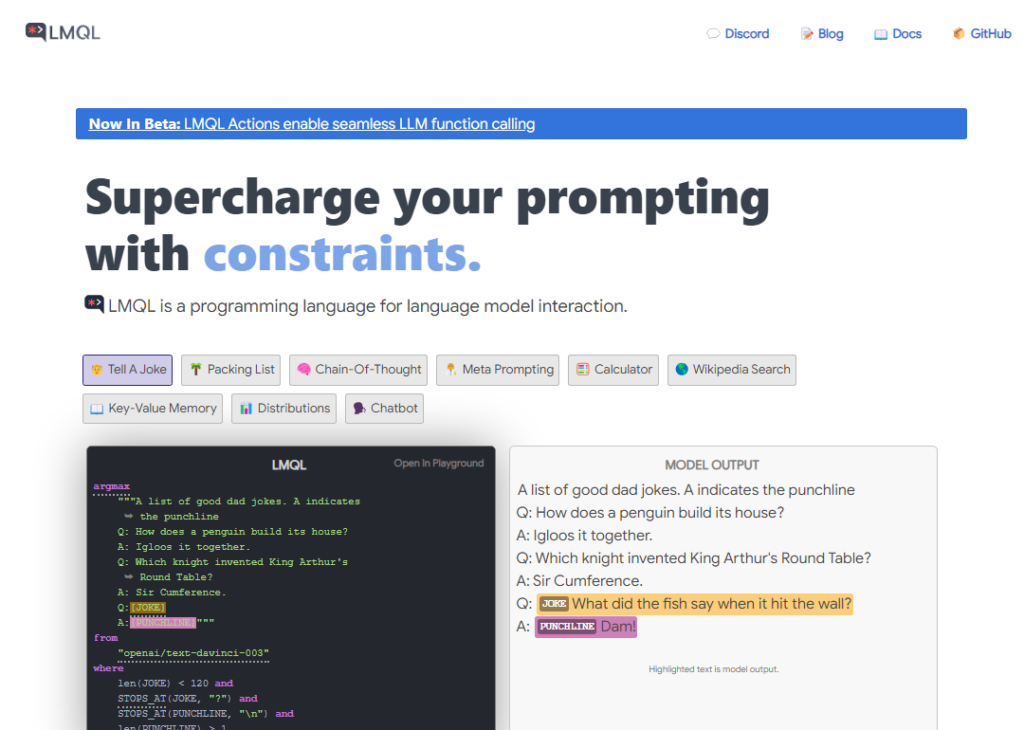

Generated by Gemini: LMQL stands for Language Model Query Language. It is a programming language designed specifically for interacting with large language models (LLMs). LMQL makes it easier to control an LLM's generation process and to constrain its output to the form you need.

LMQL has a declarative side: you state constraints on the output you want, not how the model should decode it. This makes LMQL usable even if you are not familiar with the underlying implementation of the LLM.

LMQL also supports a variety of features that can be used to improve the quality and efficiency of LLM generation, such as:

- Prompt engineering: LMQL allows you to use prompt engineering techniques to guide the LLM towards generating the desired output. For example, you can use prompts to provide the LLM with context, examples, and constraints.

- Control flow: LMQL supports regular Python control flow statements, such as loops and conditional statements. This allows you to control the generation process programmatically.

- Efficiency: LMQL uses novel, partial evaluation semantics to evaluate and control the LLM decoding process on a token level. This can lead to significant efficiency gains over existing approaches.
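The token-level control described above can be illustrated with a toy sketch. This is not LMQL's API or implementation: `toy_model`, `digits_only`, and `constrained_decode` are hypothetical names, and the token scores are made up. The sketch only shows the general idea of checking a constraint against the partial output at every decoding step and masking tokens that would violate it, instead of generating a full answer and rejecting it afterwards.

```python
from typing import Callable, Dict

EOS = "<eos>"  # end-of-sequence marker used by the toy model


def toy_model(prefix: str) -> Dict[str, float]:
    """Hypothetical stand-in for an LLM: a score for each candidate next token."""
    if prefix == "":
        return {"forty": 0.6, "4": 0.3, EOS: 0.1}
    if prefix == "4":
        return {"-two": 0.5, "2": 0.4, EOS: 0.1}
    return {EOS: 0.7, "!": 0.2, "0": 0.1}


def digits_only(max_len: int) -> Callable[[str], bool]:
    """Constraint evaluated on a partial output: digits only, at most max_len characters."""
    def check(text: str) -> bool:
        return text.isdigit() and len(text) <= max_len
    return check


def constrained_decode(
    model: Callable[[str], Dict[str, float]],
    constraint: Callable[[str], bool],
    max_steps: int = 10,
) -> str:
    """Greedy decoding that masks tokens violating the constraint at each step."""
    text = ""
    for _ in range(max_steps):
        scores = model(text)
        # Keep EOS, plus every token whose continuation still satisfies the constraint.
        allowed = {
            tok: score
            for tok, score in scores.items()
            if tok == EOS or constraint(text + tok)
        }
        if not allowed:
            break
        best = max(allowed, key=allowed.get)
        if best == EOS:
            break
        text += best
    return text


constrained = constrained_decode(toy_model, digits_only(2))
unconstrained = constrained_decode(toy_model, lambda _text: True)
print(constrained, unconstrained)
```

Because the loop is ordinary Python, the same structure accommodates conditionals or further loops around the decoding step. In LMQL itself, constraints of this kind are written in a query's `where` clause and the runtime enforces them during decoding.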

Overall, LMQL is a powerful and flexible language for interacting with LLMs. It makes it easier to control the generation process and to get the desired results.

Here are some of the benefits of using LMQL:

- Easier to use: you state output constraints in the query itself instead of enforcing them with hand-written post-processing.

- More powerful: LMQL supports a variety of features that can be used to improve the quality and efficiency of LLM generation.

- More flexible: LMQL can be used with a variety of LLMs, including models served through the OpenAI API and models run locally with Hugging Face Transformers.

If you are using LLMs in your work, I encourage you to check out LMQL. It is a powerful and flexible tool that can make it easier to get the most out of LLMs.