Generated by Gemini:

OpenAI has officially launched Sora, a text-to-video AI model that generates videos from text prompts. Sora produces clips with high visual quality that closely follow the prompt. The research preview unveiled in February 2024 showed videos up to one minute long, while the public release limits each generation to 20 seconds.

Sora, developed by OpenAI, represents a significant advancement in AI-driven video generation. Here’s an in-depth look at Sora, based on the latest available information:

Overview:

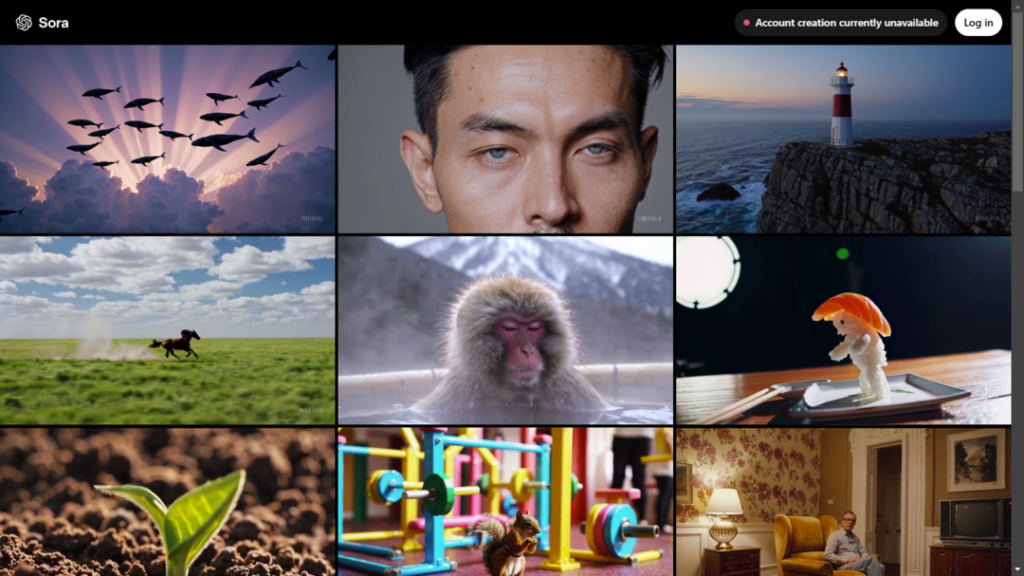

- Product: Sora is an AI model that generates high-quality videos from text prompts, capable of creating scenes with complex elements, multiple characters, and detailed environments.

Key Features:

- Text-to-Video Generation: Users enter text descriptions to generate videos, and the model can handle detailed and imaginative prompts. The public release supports clips of up to 20 seconds; the earlier research preview demonstrated clips of up to one minute.

- Video Quality: Produces videos at resolutions up to 1080p (for ChatGPT Pro subscribers) and can render plausible physics, lighting, and motion, though it still has limitations in simulating complex physics and maintaining spatial consistency.

- Remix and Editing: Offers a “Remix” feature where users can modify existing videos or images by providing additional text prompts, adjusting elements within the scene.

- Storyboarding: Allows for the creation of multi-shot videos from a series of text prompts, aiming for narrative consistency across scenes.

- Safety Features: Restricts what users can generate, blocking content such as sexual material, graphic violence, hate speech, and material that infringes copyright.

Accessibility and Availability:

- Launch: Sora became publicly available on December 9, 2024, for ChatGPT Plus and Pro subscribers, though not initially in the EU, the UK, or Switzerland, reportedly because of regulatory requirements.

- User Interface: Sora operates via a web platform at sora.com, where users can generate, save, and organize videos into folders.

Technical Insights:

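- Model Architecture: Sora is a diffusion model built on a transformer backbone. According to OpenAI's technical report, it operates on compressed "spacetime patches" of video, which lets a single model handle different durations, resolutions, and aspect ratios while keeping scenes coherent.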

- Training Data: Trained on a diverse set of public and licensed videos, though specifics about the dataset are not fully disclosed.

Applications and Use Cases:

- Creative Content: Ideal for filmmakers, artists, and content creators looking to visualize concepts or create short films.

- Education and Research: Potential use in educational settings for creating dynamic visual aids or in research for simulating environments.

- Marketing and Advertising: Offers a tool for quick, customized video content creation for campaigns.

Challenges and Criticisms:

- Realism and Physics: While advanced, Sora still struggles with accurately simulating complex physical interactions or maintaining object permanence over time.

- Ethical Concerns: There’s ongoing debate about AI-generated content, including issues of copyright, misinformation, and the displacement of human creatives.

- Privacy and Safety: With the ability to generate realistic video content, there are concerns about misuse for creating deepfakes or misleading content.

Community and Feedback:

- Artist and Developer Engagement: OpenAI sought feedback from a select group of artists, filmmakers, and red teamers to refine Sora before its broader release.

- Public Reaction: There’s been a mix of excitement for the creative possibilities and concern over implications for traditional content creation industries.

Citations:

- Information about Sora's features, launch, and capabilities has been covered extensively by tech news outlets such as The Verge and TechCrunch, as well as in OpenAI's official announcements.

Sora represents OpenAI's venture into video generation, pushing the boundaries of AI's capabilities in multimedia content creation. However, like other pioneering technologies, it faces challenges that must be addressed as it moves into broader use.