Generated by Gemini: StyleTTS 2 is a text-to-speech (TTS) model that aims for human-level speech synthesis by combining style diffusion with adversarial training using large speech language models (SLMs). Unlike its predecessor, StyleTTS, which required reference speech to model style, StyleTTS 2 treats style as a latent random variable and samples it with a diffusion model. This lets it generate a suitable speaking style directly from text, without reference audio. Because only a compact style vector is diffused rather than the speech itself, the diffusion step stays efficient while still providing the diversity that diffusion models are known for.

Overview:

Development: StyleTTS 2 was developed by Yinghao Aaron Li and colleagues at Columbia University.

Objective: The model aims to produce speech that rivals or surpasses human recordings in terms of naturalness and expressiveness.

Key Features:

Style Diffusion: Unlike its predecessor, StyleTTS 2 models style as a latent random variable sampled by a diffusion model, so it can generate the most suitable style for a given text without reference speech. Because the diffusion operates on a fixed-length style vector rather than on the speech itself, sampling is efficient while still benefiting from the diversity that diffusion models offer.
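
The sampling idea can be sketched in Python. This is a toy under stated assumptions, not the StyleTTS 2 code: the trained, text-conditioned denoiser is replaced by a closed-form denoiser that assumes the styles fitting a text are Gaussian around a hypothetical `text_embedding`, and a plain Euler solver on the probability-flow ODE stands in for whatever sampler the repository actually uses. All names (`noise_levels`, `toy_denoiser`, `sample_style`) are hypothetical.

```python
import random


def noise_levels(num_steps=20, sigma_max=10.0, sigma_min=0.01):
    """Geometric noise schedule from sigma_max down to sigma_min, then a final 0."""
    ratio = (sigma_min / sigma_max) ** (1.0 / (num_steps - 1))
    return [sigma_max * ratio**i for i in range(num_steps)] + [0.0]


def toy_denoiser(x, sigma, text_embedding, style_std):
    """Hypothetical stand-in for the trained, text-conditioned denoiser.

    Assumes the styles that suit a text are Gaussian around `text_embedding`
    with spread `style_std`; for that case the optimal denoiser has this closed form.
    """
    shrink = style_std**2 / (style_std**2 + sigma**2)
    return [m + shrink * (xi - m) for xi, m in zip(x, text_embedding)]


def sample_style(text_embedding, style_std=0.5, num_steps=20, rng=random):
    """Sample one style vector by integrating the probability-flow ODE with Euler steps."""
    sigmas = noise_levels(num_steps)
    # Start from pure Gaussian noise at the highest noise level.
    x = [rng.gauss(0.0, sigmas[0]) for _ in text_embedding]
    for sigma, sigma_next in zip(sigmas[:-1], sigmas[1:]):
        denoised = toy_denoiser(x, sigma, text_embedding, style_std)
        # Euler step on dx/dsigma = (x - D(x, sigma)) / sigma.
        x = [xi + (sigma_next - sigma) * (xi - di) / sigma for xi, di in zip(x, denoised)]
    return x
```

Each call starts from a fresh noise draw, so the same text can yield different style vectors; that randomness is where the stylistic variety comes from. Setting `style_std=0.0` collapses every sample onto `text_embedding`.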

Adversarial Training: Uses large pre-trained speech language models (SLMs) such as WavLM as discriminators, combined with a novel differentiable duration modeling that allows the whole system to be trained end to end. This improves the naturalness of the synthesized speech.
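
The adversarial part can be sketched in a few lines. Assumptions: the SLM (for example WavLM) is treated as a fixed feature extractor whose per-frame outputs are given as plain lists, a hypothetical linear head turns them into a realness score, and a least-squares GAN objective is used as one common choice. Check the repository's loss code for the exact head and objective StyleTTS 2 uses; the function names here are hypothetical.

```python
from statistics import fmean


def discriminator_head(slm_features, weights, bias=0.0):
    """Hypothetical linear head: average per-frame SLM features over time, then score them."""
    num_frames = len(slm_features)
    pooled = [sum(frame[d] for frame in slm_features) / num_frames for d in range(len(weights))]
    return sum(w * p for w, p in zip(weights, pooled)) + bias


def discriminator_loss(real_scores, fake_scores):
    """Least-squares GAN loss for the discriminator: real scores toward 1, generated toward 0."""
    return fmean((1.0 - r) ** 2 for r in real_scores) + fmean(f**2 for f in fake_scores)


def generator_loss(fake_scores):
    """Least-squares GAN loss for the generator: push scores on generated speech toward 1."""
    return fmean((1.0 - f) ** 2 for f in fake_scores)
```

During training the two losses alternate: the discriminator head is updated to separate real from synthesized speech, then the TTS model is updated to lower `generator_loss`, so that its output looks real as judged through the SLM's features.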

Performance: In the authors' listening tests with native English speakers, it surpassed human recordings on the single-speaker LJSpeech dataset and matched them on the multi-speaker VCTK dataset.
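
Listening-test comparisons like these are often reported as a comparative mean opinion score (CMOS), where raters hear two systems side by side and score their preference. The sketch below assumes a -3 to +3 scale and a normal-approximation 95% confidence interval; it only shows the arithmetic and does not reproduce the paper's protocol or numbers. `cmos` is a hypothetical helper.

```python
import math
from statistics import mean, stdev


def cmos(ratings):
    """Comparative MOS: mean of paired preference ratings plus a 95% normal-approximation CI.

    Each rating compares system A against system B on a -3..+3 scale;
    positive values mean listeners preferred A.
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(not -3 <= r <= 3 for r in ratings):
        raise ValueError("ratings must lie in [-3, 3]")
    avg = mean(ratings)
    half_width = 1.96 * stdev(ratings) / math.sqrt(len(ratings))
    return avg, (avg - half_width, avg + half_width)
```

A positive mean whose interval excludes 0 suggests listeners preferred system A; an interval straddling 0 is consistent with the two systems being on par.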

Applications:

High-Quality TTS: Suitable for applications requiring natural-sounding voiceovers, such as audiobooks, virtual assistants, video narration, and accessibility tools.

Voice Cloning: When trained on a large multi-speaker corpus such as LibriTTS, it can imitate a speaker not seen during training from a short reference clip, without fine-tuning (zero-shot speaker adaptation).
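
The zero-shot path can be sketched as follows. These are assumptions for illustration, not details taken from the repository: the style encoder's per-frame outputs for the reference clip are given as lists (the layers that produce them are not shown), the encoder ends in temporal average pooling so any clip length yields one fixed-size vector, and a linear blend with a diffusion-sampled style controls how closely the output follows the reference speaker. `average_pool` and `blend_styles` are hypothetical names.

```python
def average_pool(frames):
    """Collapse per-frame encoder outputs (time x dim) into one fixed-length style vector."""
    if not frames:
        raise ValueError("reference clip produced no frames")
    dim = len(frames[0])
    return [sum(frame[d] for frame in frames) / len(frames) for d in range(dim)]


def blend_styles(sampled_style, reference_style, alpha):
    """Linear mix: alpha=1 keeps the text-derived style, alpha=0 copies the reference speaker."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if len(sampled_style) != len(reference_style):
        raise ValueError("style vectors must have the same length")
    return [alpha * s + (1.0 - alpha) * r for s, r in zip(sampled_style, reference_style)]
```

No weights are updated in this path, so adapting to a new voice costs only a forward pass over the reference clip.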

Technical Aspects:

Model Architecture: Combines a pre-trained phoneme-level BERT (PL-BERT) for text encoding, a style encoder that captures voice characteristics, and a generator (decoder) that synthesizes the speech. The style diffusion process supplies varied, text-appropriate style vectors to these components.
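
Between the text encoder and the generator, token-level features must be stretched to the generator's frame rate using predicted durations. StyleTTS 2 describes its own differentiable upsampling method for this step; the sketch below swaps in the simpler, well-known Gaussian upsampling (in the spirit of Non-Attentive Tacotron) only to show why such a step can pass gradients back to a duration predictor. `gaussian_upsample` and its `sigma` parameter are hypothetical.

```python
import math


def gaussian_upsample(token_features, durations, sigma=1.0):
    """Expand token-level features (tokens x dim) to frame level (frames x dim).

    Token i gets a centre c_i halfway through its duration span; each frame is a
    softmax-normalized mix of all tokens weighted by exp(-(t - c_i)^2 / (2 sigma^2)).
    Apart from rounding the total length, every step is smooth in `durations`.
    """
    num_frames = round(sum(durations))
    centres, start = [], 0.0
    for d in durations:
        centres.append(start + d / 2.0)
        start += d
    dim = len(token_features[0])
    frames = []
    for t in range(num_frames):
        pos = t + 0.5  # centre of frame t on the same time axis as the token centres
        logits = [-((pos - c) ** 2) / (2.0 * sigma**2) for c in centres]
        peak = max(logits)  # subtract the max for numerical stability
        weights = [math.exp(z - peak) for z in logits]
        total = sum(weights)
        frames.append(
            [sum(w * feat[k] for w, feat in zip(weights, token_features)) / total for k in range(dim)]
        )
    return frames
```

With a small `sigma` each frame effectively copies its own token (hard repetition); larger values blend neighbouring tokens.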

Training Data: Trained on LJSpeech (single speaker), VCTK (multiple speakers), and LibriTTS (a large multi-speaker corpus), with a focus on good performance in both single- and multi-speaker scenarios.
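
As a starting point for data preparation, the sketch below reads LJSpeech's `metadata.csv`, assuming its usual layout of one clip per line with three `|`-separated fields (clip ID, raw transcription, normalized transcription) and audio files under `wavs/`. StyleTTS 2's training scripts read their own file lists, so check the repository's data folder for the exact format they expect; `read_ljspeech_metadata` is a hypothetical helper.

```python
import csv
import io


def read_ljspeech_metadata(text):
    """Parse LJSpeech-style metadata: one utterance per line, fields separated by '|'.

    Assumed layout: clip id | raw transcription | normalized transcription.
    """
    rows = []
    # QUOTE_NONE: transcriptions contain literal double quotes, which must not be
    # interpreted as CSV field quoting.
    reader = csv.reader(io.StringIO(text), delimiter="|", quoting=csv.QUOTE_NONE)
    for fields in reader:
        if not fields:
            continue  # skip blank lines
        clip_id, raw, normalized = fields
        rows.append({"wav": f"wavs/{clip_id}.wav", "raw": raw, "text": normalized})
    return rows
```

The normalized field (numbers spelled out) is usually the one fed to phonemization.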

Accessibility and Use:

Open-Source: The codebase is available on GitHub, allowing developers and researchers to explore or build upon the technology.

-

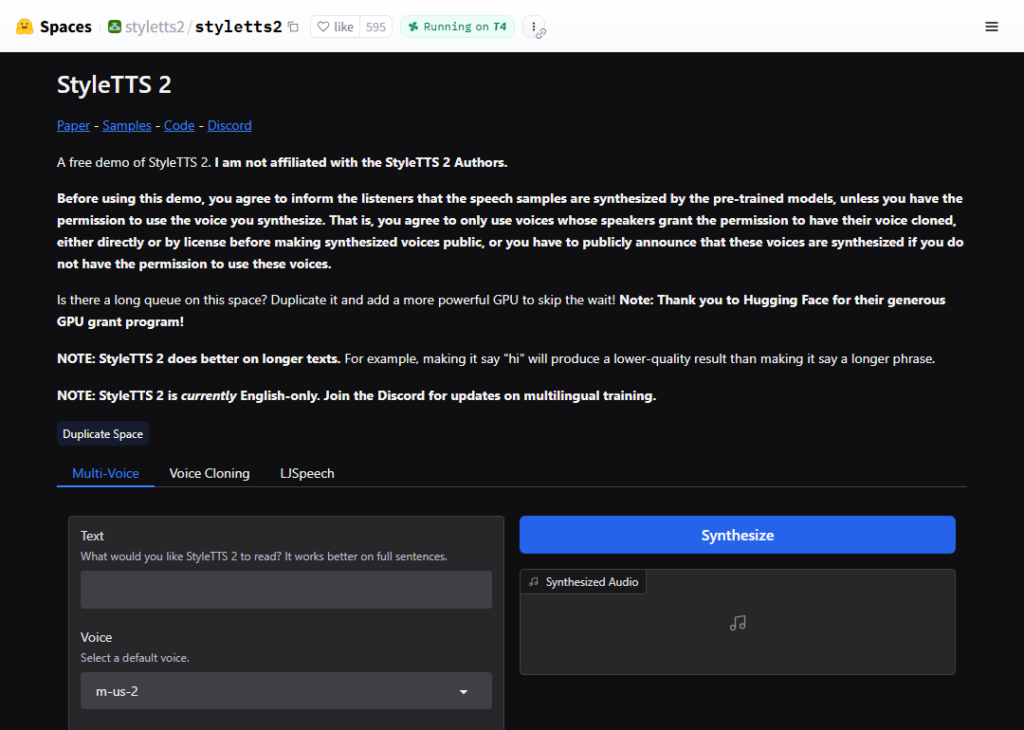

Pretrained Models: Pre-trained models are available for download, often from platforms like Hugging Face, enabling quick deployment for testing or integration.

Community and Development:

Active Research: There is ongoing work to improve and extend StyleTTS 2, with discussions and shared experiences in forums like Reddit and GitHub issues.

Implementation: There are Python packages and inference modules available that make using StyleTTS 2 more accessible, even for those without extensive machine learning backgrounds.

Challenges and Future Directions:

Resource Intensity: While efficient compared to some previous models, running StyleTTS 2 still requires significant computational resources, particularly for training from scratch.
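
A simple way to quantify inference cost is the real-time factor (RTF): synthesis time divided by the duration of the generated audio. The helper below works with any synthesis callable; `real_time_factor` and its `synthesize` argument are hypothetical, and on a GPU, asynchronous execution can make naive wall-clock timing misleading.

```python
import time


def real_time_factor(synthesize, text, sample_rate):
    """Wall-clock synthesis time divided by the duration of the audio produced.

    `synthesize` is any callable that returns a sequence of audio samples for `text`;
    values below 1.0 mean faster than real time.
    """
    start = time.perf_counter()
    samples = synthesize(text)
    elapsed = time.perf_counter() - start
    audio_seconds = len(samples) / sample_rate
    if audio_seconds == 0:
        raise ValueError("synthesizer returned no audio")
    return elapsed / audio_seconds
```

Averaging over several sentences of realistic length, on the hardware you plan to deploy on, gives a more trustworthy figure than a single call.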

Generalization: Improving the model's generalization across different accents, languages, and emotional tones remains an area of focus.

Notable Resources:

Papers: The original research paper titled "StyleTTS 2: Towards Human-Level Text-to-Speech through Style Diffusion and Adversarial Training with Large Speech Language Models" provides a deep dive into the methodology.

Demos and Samples: Audio demos are available to assess the model's output, showing its capability across various texts and styles.

For those interested in exploring StyleTTS 2 further, the official GitHub repository and the accompanying paper provide the most current and detailed information. AI TTS technology is evolving rapidly, so watch academic publications, community forums, and developer blogs for the latest updates.

StyleTTS 2: Towards Human-Level Text-to-Speech through Style Diffusion and Adversarial Training with Large Speech Language Models

GitHub repository: https://github.com/yl4579/StyleTTS2

Installation steps: https://drive.google.com/file/d/1VyyVJfGaFmcURw3zDnzYDhMZTwXp8DaF/view?usp=sharing